Monday, Oct 10

Julia is a new programming language for technical computing, and it shows a lot of promise as a replacement for Python as the standard language for scientific computing.

One particularly nice feature is that Julia supports parallelism, both through manually launching processes from within your code and through a parallel region syntax which resembles OpenMP. The latter is particularly interesting because it:

- Is very simple.

- Works between processes rather than in a shared memory context like OpenMP, which means it is possible to spread workers across multiple nodes.

An example looks something like this (from the PI example):

    sum = @parallel (+) for i = 1:numsteps
        x = (i - 0.5) * slice
        (4.0/(1.0 + x^2))
    end

This defines a parallel for loop that uses + as the reduction operator and stores the result in sum.
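
For context, here is a minimal self-contained sketch of the whole calculation. It is written for Julia 1.x, where @parallel was renamed @distributed and lives in the Distributed standard library; the function name estimate_pi is made up for illustration. With no workers added, the loop simply runs on the main process.

```julia
using Distributed

# Midpoint-rule estimate of the integral of 4/(1+x^2) over [0, 1], which is pi.
function estimate_pi(numsteps)
    slice = 1.0 / numsteps
    # Each worker sums its share of the range; (+) combines the partial sums.
    total = @distributed (+) for i = 1:numsteps
        x = (i - 0.5) * slice
        4.0 / (1.0 + x^2)
    end
    return total * slice
end

println(estimate_pi(10_000_000))
```

The value of the last expression in the loop body is what gets fed to the reduction, which is why the body ends with the bare integrand.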

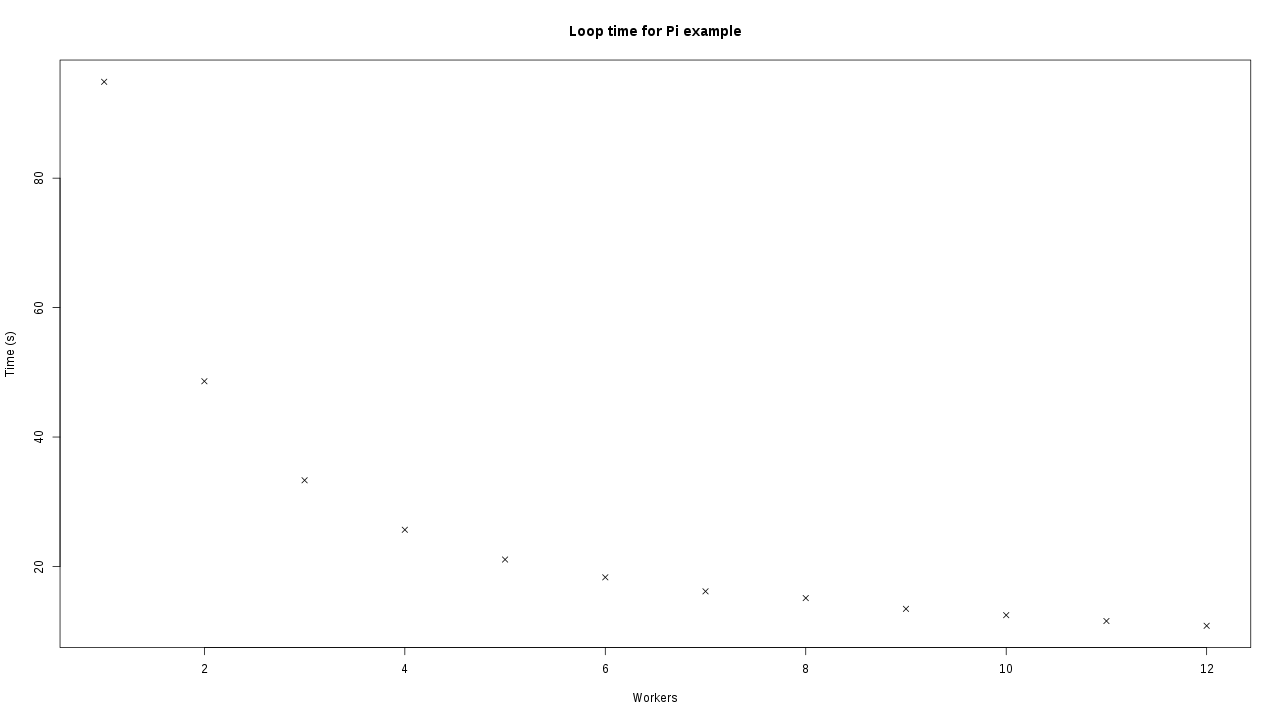

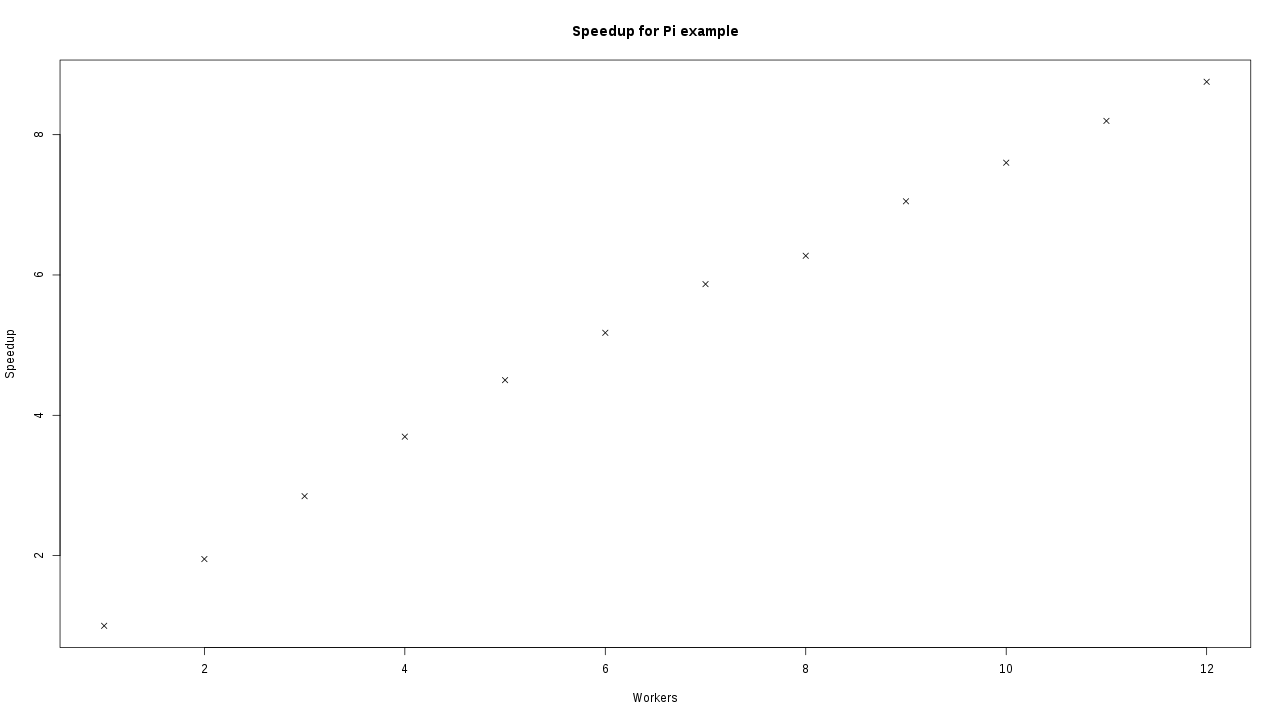

Here are timing and scaling graphs for the PI code running within a node on Legion:

One thing to be aware of is that inter-node traffic is IP, so on Legion it will go over the Ethernet interface. On Grace, where there is IP over IB, it’ll go over the InfiniBand interface.

Now, Julia provides a mechanism for starting some number (N) of parallel workers at startup, and even for passing it a machinefile:

$ julia --machinefile=$TMPDIR/machines -p 4 ./pi.jl

This should start 4 worker processes (the main process doesn’t do any work) via SSH on the machines listed in the machinefile, which uses the normal machinefile format. If you are only launching on the local machine you can leave off the --machinefile option.

NOTE - On Julia before 0.5.x, even local runs open ports on your Internet-facing interface which can be used to execute code!! Julia 0.5.0 both allows you to control this behaviour and uses a shared cookie to protect you.

Unfortunately, when we do this between nodes on Legion, it spews a long list of communication errors, almost certainly to do with how locked down SSH is between nodes to prevent users interfering with each other’s jobs.

Interestingly, when we manually add processes from within a Julia script using addprocs() we don’t get these errors.

Investigation in a dev environment shows that this is due to issues with SSH tunnelling and whether local ports are restricted to the loopback interface or not in multi-node jobs. From the documentation, there doesn’t appear to be a way to control this behaviour, either through environment variables or through command line arguments to julia.

Since writing a Julia equivalent to GERun needs a script anyway, we can write that script in Julia and add the processes at this stage, before modifying ARGS and including the user’s script to run it.
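
A minimal sketch of that last step, not the actual gejulia source: the helper run_user_script! is hypothetical, and it is written for Julia 1.x, where popfirst! replaces the shift! of Julia 0.5. It removes the script name from the argument list in place, so the user's script sees only its own arguments, and then includes the script into Main.

```julia
# Hypothetical wrapper step: the first argument is the user's script,
# the remaining arguments are left for that script to read.
function run_user_script!(args::Vector{String})
    isempty(args) && error("usage: <wrapper> <yourscript> <args>")
    script = popfirst!(args)      # mutates the vector in place
    Base.include(Main, script)    # run the user's script in Main
end
```

A wrapper would call run_user_script!(ARGS) after adding its workers. Whether mutating ARGS in place like this avoids the warning seen on Julia 0.5 has not been checked.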

There are some downsides here:

- Namespace pollution: the launcher defines variables in the same global scope as the user’s script, so they could clash with names the user chooses (I’ve prefixed everything with GE_ to reduce the risk).

- Modifying ARGS causes Julia to throw a warning because it’s part of the default environment (this can safely be ignored).

- I don’t know much Julia (the only other program I’ve written is the PI example).

So I wrote it. It is deployed as gejulia on Legion and Grace as part of GERun and you can find the code here.

You invoke it instead of julia, so in your job script you would run:

$ gejulia <yourscript> <args>

Some things I (re-)learned:

- SSH is really, really locked down on Legion and Grace, which can make diagnosing problems difficult.

- It’s better to detect whether processes are starting on the local node and use the local version of addprocs if they are.
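
The local versus remote decision can be sketched as follows. This is an illustration under assumptions rather than the gejulia code: count_slots and add_workers are hypothetical names, the machinefile is assumed to list one hostname per slot, and the hostnames in it are assumed to match what gethostname() returns on the node. It targets Julia 1.x, where addprocs comes from the Distributed standard library.

```julia
using Distributed

# Count slots per host in a machinefile that lists one hostname per slot.
function count_slots(lines)
    counts = Dict{String,Int}()
    for line in lines
        host = String(strip(line))
        isempty(host) && continue          # skip blank lines
        counts[host] = get(counts, host, 0) + 1
    end
    return counts
end

# Hypothetical helper: plain addprocs(n) on the local node, SSH elsewhere.
function add_workers(counts, localhost = gethostname())
    for (host, n) in counts
        if host == localhost
            addprocs(n)              # local workers, no SSH involved
        else
            addprocs([(host, n)])    # n workers on a remote host via SSH
        end
    end
end

# Example with the same shape as a 16-slot job spread over two nodes.
hosts = vcat(fill("node-x02f-022", 12), fill("node-x02f-021", 4))
println(count_slots(hosts))
```

In a real job the input would come from something like count_slots(readlines(machinefile)), followed by add_workers on the result.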

Here is the output of a modified version of the PI example, which also gets each worker to print its hostname, running on the Legion dev environment:

    Grid Engine Julia parallel launcher abstraction layer version i (public)
    Dr Owain Kenway, RCFS, RITS, ISD, UCL, 10th of October, 2016
    For licensing terms, see LICENSE.txt
    Detected 16 slots.
    Machinefile should be /tmpdir/job/1057.undefined/machines
    Machinefile located.
    Any["node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-022"; "node-x02f-021"; "node-x02f-021"; "node-x02f-021"; "node-x02f-021"]
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding local worker: node-x02f-022
    Adding remote worker: node-x02f-021
    Adding remote worker: node-x02f-021
    Adding remote worker: node-x02f-021
    Adding remote worker: node-x02f-021
    Calculating PI using:
    10000000000 slices
    16 worker(s)
    From worker 17: node-x02f-021
    From worker 16: node-x02f-021
    From worker 14: node-x02f-021
    From worker 15: node-x02f-021
    From worker 10: node-x02f-022
    From worker 7: node-x02f-022
    From worker 6: node-x02f-022
    From worker 8: node-x02f-022
    From worker 5: node-x02f-022
    From worker 4: node-x02f-022
    From worker 11: node-x02f-022
    From worker 12: node-x02f-022
    From worker 9: node-x02f-022
    From worker 13: node-x02f-022
    From worker 3: node-x02f-022
    From worker 2: node-x02f-022
    Obtained value of PI: 3.1415926535898557
    Time taken: 8.319686889648438 seconds

And the job script that ran it:

    #!/bin/bash -l
    #$ -pe mpi 16
    #$ -l h_rt=0:15:0
    #$ -l mem=1G
    #$ -wd /home/uccaoke/Scratch/output
    #$ -N MPIJulia-default
    #$ -ac exclusive
    module load julia/0.5.0
    gejulia /home/uccaoke/src/julia/pi_hostname.jl 10000000000
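
The source of pi_hostname.jl is not shown here, so the following is only a sketch of one way to find out where each worker is running, assuming Julia 1.x and the Distributed standard library. The "From worker N:" prefix in the output above is what Julia adds when a worker writes to standard output; this sketch instead fetches each hostname back to the main process, and the function name worker_hostnames is made up for illustration.

```julia
using Distributed

# Ask every worker for its hostname and collect (id, hostname) pairs
# on the main process.
function worker_hostnames()
    return [(w, remotecall_fetch(gethostname, w)) for w in workers()]
end

for (id, host) in worker_hostnames()
    println("worker ", id, ": ", host)
end
```

If no workers have been added, workers() contains only the main process, so the loop prints a single line for the local host.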